(4.9TB of CDN traffic last month) / (4.3GB to download all of the models) ≈ 1140 LibreTranslate instances deployed last month.

About 100 users a day visit libretranslate.com.

To update these numbers: there were 9.2 TB of traffic to the CDN last month. At ~7 GB to download all models, and with the majority of the traffic likely coming from LibreTranslate, this implies ~1300 LibreTranslate instances created last month.
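Both estimates above are the same ratio of monthly CDN egress to the size of one full model download. A minimal sketch, assuming decimal units (1 TB = 1000 GB) and that each new instance downloads the full model set exactly once. Re-downloads (for example from CI runs), partial downloads and non-LibreTranslate traffic all skew the result, so it is an order-of-magnitude figure rather than a count:

```python
def estimate_instances(traffic_tb: float, full_download_gb: float) -> float:
    """Estimate how many full model downloads fit in a month of CDN traffic.

    Assumes decimal units (1 TB = 1000 GB) and that every new instance
    downloads every model exactly once.
    """
    if full_download_gb <= 0:
        raise ValueError("full_download_gb must be positive")
    return traffic_tb * 1000 / full_download_gb


if __name__ == "__main__":
    # Figures quoted in this thread.
    print(round(estimate_instances(4.9, 4.3)))  # 1140
    print(round(estimate_instances(9.2, 7.0)))  # 1314
```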

That’s quite a bit! Are the bandwidth charges from DO reasonable, or do we need to study a better distribution model (or perhaps just help offset the CDN costs)? I just want to make sure this is sustainable and that model hosting isn’t putting a high cost on the project.

It may be worth checking, but I have the impression that the models are downloaded on every test run on GitHub: pytest takes more than 3 minutes there, while on my PC it takes only 10 seconds (I hope not all of them!).

EDIT: translation seems to work in GitHub Actions; see the last line of the pytest output in the commit "Run test for pull requests, add some functional tests" (LibreTranslate/LibreTranslate@97ff47b):

`tests/test_api/test_api_translate.py::test_api_detect_language PASSED [100%]`

I forgot to rename the function, but it is the translation test.

I’m spending ~$100/mo on DigitalOcean to host the CDN, which isn’t a problem, but hosting could become more expensive in the future. If hosting costs become an issue, we can look at lower-cost providers.

Donations and sign-ups through my DigitalOcean referral link are also appreciated: if people want to host a LibreTranslate instance on DigitalOcean, they can offset the cost of the CDN by signing up with the referral link.

I also publish peer-to-peer links for the models, so they are always available as long as someone is seeding.

A cheaper option (one that doesn’t offer a CDN, however) could be to set up a server with some disk space (Dedicated Root Server Hosting - Hetzner Online GmbH) running just a static nginx web server.

I’m also eagerly waiting for cloudflare to open the beta to R2: https://developers.cloudflare.com/r2/ which promises to reduce storage costs of static files by quite a bit.

I guess I should ask: perhaps I could set up a mirror for the CDN, so that LibreTranslate can point to that mirror instead of downloading from the Argos repository, thus reducing the egress load? A simple rclone script could then keep the mirror in sync.
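rclone would do the actual copying; as a complement, here is a minimal Python sketch (a hypothetical helper, not part of Argos Translate or rclone) that reports which package files a mirror is still missing. It assumes the mirror stores each file under the last path segment of its CDN URL, and it takes the mirror's file names as a plain list so it can be fed from `os.listdir()` or from a remote listing:

```python
import os
from typing import Iterable, List
from urllib.parse import urlparse


def missing_from_mirror(package_urls: Iterable[str],
                        mirror_files: Iterable[str]) -> List[str]:
    """Return the package URLs whose files are not present on the mirror.

    Hypothetical helper: assumes the mirror keeps each package under the
    same file name as the last path segment of its original URL.
    """
    present = set(mirror_files)
    missing = []
    for url in package_urls:
        filename = os.path.basename(urlparse(url).path)
        if filename not in present:
            missing.append(url)
    return missing
```

For example, `missing_from_mirror(urls, os.listdir("/srv/argos-mirror"))` would list what still needs syncing (the directory path is a placeholder).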

I will look into whether it’s possible to cache the models in GitHub Actions, because downloading them for every test run is annoying.
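The idea behind caching in CI is to fetch each model only when it is not already in a directory that the workflow persists between runs (for example with GitHub's `actions/cache` action). A minimal sketch of that check, assuming a hypothetical `download(path)` callable that writes the model file; it does not use the Argos Translate API, and the directory Argos Translate actually installs packages into is not shown here:

```python
import os
from typing import Callable


def ensure_cached(cache_dir: str, filename: str,
                  download: Callable[[str], None]) -> str:
    """Return the cached path for filename, calling download() only on a miss.

    Hypothetical helper: cache_dir is assumed to be a directory the CI
    workflow restores between runs, so models are fetched once instead of
    on every test run.
    """
    os.makedirs(cache_dir, exist_ok=True)
    path = os.path.join(cache_dir, filename)
    if not os.path.exists(path):
        tmp_path = path + ".part"
        download(tmp_path)          # write to a temporary file first...
        os.replace(tmp_path, path)  # ...so an interrupted download is never cached
    return path
```

Downloading to a `.part` file and renaming it afterwards means a cancelled job cannot leave a truncated model in the cache.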

Thanks for the ideas. I don’t mind LibreTranslate users using the Argos Translate CDN, and I’m able to pay for it for now. If costs increase in the future, it seems like we have a lot of options to reduce them; we’ve already changed CDNs a few times without major issues: Google Drive → GitHub repo → Google Cloud → DigitalOcean.

Ideally, to decentralize things in the future, we would have multiple users training and hosting their own models, so that no single CDN hosts the whole ecosystem. It should probably be documented better, but the ARGOS_PACKAGE_INDEX environment variable can be used to set a custom index.

I’m getting close on my da to en Europarl package. I figured out what the size field in the metadata meant. Now I just need to get Ubuntu installed on my PC to try actually training it.


Currently I just have one of the smaller droplets, for playing around and for my website (which is also just for playing around and testing). I host my email elsewhere, which is the main reason I have a domain at all.

I’d definitely be willing to upgrade the droplet (it’s currently one of the ~20 USD ones; I forget the specs) and host models, or help in any other useful way.

Is there any way to share API keys? For example, I could run my own LibreTranslate server with keys required, but have users still pay LibreTranslate the $9.

I still have to finish teaching myself how to train the da-en one, but I wasn’t sure what to do with it once I get there… Since they’re just links in the JSON file, I figured I’d put it up on my server and say “hey, here’s a Danish to English model if you want it…”

Data hosting is definitely appreciated, thanks! If you have .argosdata packages you’re willing to host, we can point the data-index.json links to your instance.

I don’t know of a way to share keys, but if you’re willing to host a public LibreTranslate instance, there is a lot of demand for mirrors.

Well, I have this one, which I haven’t trained yet: https://fortytwo-it.com/argos/data-europarl-da_en.argosdata

But I could download other current ones into there to host as well.

Do you have a good systemd or init.d script for LibreTranslate? I figured it would be a start-stop-daemon kind of thing. When I first used it on an external server for the websites I was trying to translate, I only got close: I could start/stop/restart it, but it was ignoring the command-line arguments.

And while it isn’t overly important: http://fortytwo-it.com:5000 works, but https://fortytwo-it.com:5000 does not, even though I did add the --ssl argument.

Again, I probably need to upgrade my droplet a few notches, but I’m certainly willing to take some of the load.

Running across this project got me back into contributing. I use a lot of free/open source software, but I haven’t contributed much since maintaining a few Debian packages years ago. Writing my own simple PHP class (really just /translate) got me thinking that if I made it more complete, other people might like to use it. And wanting Danish to English got me digging through the GitHub repos and Markdown docs to see how I could make a da-en model myself… and if I can, others might want that too…
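For comparison with a /translate-only client like the PHP class mentioned above, here is a minimal Python sketch using only the standard library. It assumes the public LibreTranslate API: a JSON POST to `/translate` with `q`, `source`, `target` and `format` fields (plus `api_key` on instances that require keys), answered with a JSON object containing `translatedText`. The function names are illustrative, not part of any LibreTranslate package:

```python
import json
import urllib.request
from typing import Optional


def build_translate_request(base_url: str, text: str, source: str, target: str,
                            api_key: Optional[str] = None) -> urllib.request.Request:
    """Build a POST request for a LibreTranslate /translate endpoint."""
    payload = {"q": text, "source": source, "target": target, "format": "text"}
    if api_key:
        payload["api_key"] = api_key  # only needed on instances that require keys
    return urllib.request.Request(
        base_url.rstrip("/") + "/translate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def translate(base_url: str, text: str, source: str, target: str,
              api_key: Optional[str] = None, timeout: float = 30.0) -> str:
    """Send the request and return the translated text."""
    req = build_translate_request(base_url, text, source, target, api_key)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["translatedText"]
```

Usage would look like `translate("https://libretranslate.example.org", "Hej verden", "da", "en")`, where the URL is a placeholder and the instance must have a da→en model installed. Keeping request construction separate from sending makes the payload easy to inspect without network access.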

Thanks for contributing, and glad you were able to figure out making your own data packages.

I publish LibreTranslate-init, a set of LibreTranslate setup scripts for Ubuntu.

Currently the data server I’m using has plenty of capacity, but if you want to mirror the data packages, data-index.json supports lists of links, so we can have multiple backup links. The CDN for .argosmodel translation packages has much more load, and you could mirror that too, but by default Argos Translate just uses the first link, so a mirror won’t take much of the load (it’s still good to have backups, though). You can also seed the peer-to-peer links.
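Because each index entry can carry a list of links and the first one is used by default, where a mirror URL is placed decides whether it is a backup or the primary source. A minimal sketch that adds a mirror link to every entry, assuming the index is a JSON list of objects whose URLs live in a `"links"` list (the field name and layout are assumptions; check them against the real data-index.json) and that the mirror keeps the original file names. `add_mirror_links` is a hypothetical helper, not part of Argos Translate:

```python
import json


def add_mirror_links(index_json: str, mirror_base: str, primary: bool = False) -> str:
    """Add a mirror URL to each entry's "links" list in an Argos-style index.

    Assumes each entry is a JSON object with a "links" list of download URLs
    and that the mirror stores files under the same name as the original.
    primary=True puts the mirror first (the link used by default);
    otherwise it is appended as a backup.
    """
    entries = json.loads(index_json)
    base = mirror_base.rstrip("/")
    for entry in entries:
        links = entry.get("links") or []
        if not links:
            continue
        filename = links[0].rsplit("/", 1)[-1]
        mirror_url = f"{base}/{filename}"
        if mirror_url in links:
            continue  # already mirrored; running twice changes nothing
        if primary:
            links.insert(0, mirror_url)
        else:
            links.append(mirror_url)
        entry["links"] = links
    return json.dumps(entries, indent=2)
```

Appending keeps the existing server as the default and only adds redundancy; prepending shifts the default download traffic to the mirror.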

Got a few things so far:

I’m still having trouble getting it to work over https://. I tried LT_SSL=True in my systemd file.

I may also need to figure out Apache ProxyPass so that http://translate.fortytwo-it.com goes to the LibreTranslate server page as well.

https://libretranslate.fortytwo-it.com/

I can pretty that up later. But I have downloaded all the argosdata files.


Next I’ll probably figure out and set up IPFS, then get and seed the models.

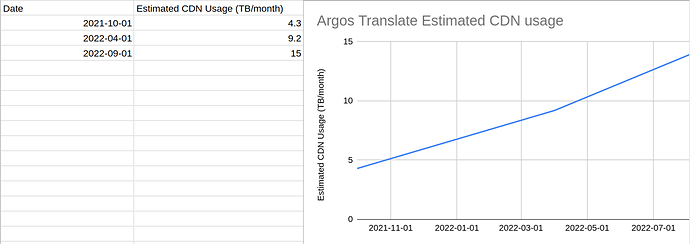

To update this thread: I’ve set up two new CDN servers with time4vps, which is a cheaper provider than DigitalOcean. Usage has also continued to increase, and I think people are now downloading ~15 TB a month of Argos Translate models.

| Date | Estimated CDN Usage (TB/month) |
|---|---|
| 2021-10-01 | 4.3 |
| 2022-04-01 | 9.2 |
| 2022-09-01 | 15 |
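To put these monthly totals in server-capacity terms (for example, when weighing a single dedicated server with nginx against a CDN), they can be converted into an average sustained bandwidth. A rough sketch assuming decimal terabytes and a 30-day month; real traffic is bursty, so peak bandwidth will be noticeably higher than this average:

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month


def average_mbit_per_s(tb_per_month: float) -> float:
    """Convert monthly transfer (decimal TB) to average throughput in Mbit/s."""
    bits = tb_per_month * 1e12 * 8
    return bits / SECONDS_PER_MONTH / 1e6


if __name__ == "__main__":
    # Values from the table above.
    for month, tb in [("2021-10-01", 4.3), ("2022-04-01", 9.2), ("2022-09-01", 15)]:
        print(f"{month}: {tb} TB/month ~ {average_mbit_per_s(tb):.1f} Mbit/s average")
```

At ~15 TB/month this works out to roughly 46 Mbit/s on average.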