I think combining language models with robotics is a very promising area, and it’s something I’m exploring with Argos Translate. By using one neural net for both language tasks, like machine translation, and robotics tasks, the robot is able to understand natural human language and act accordingly in the physical world.

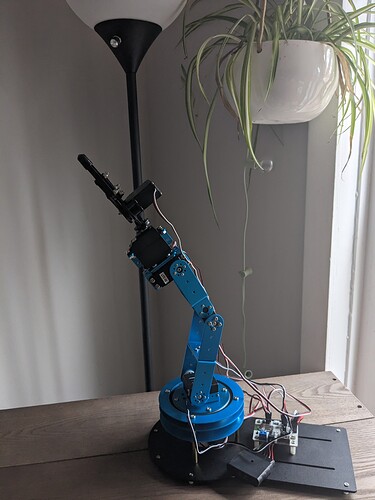

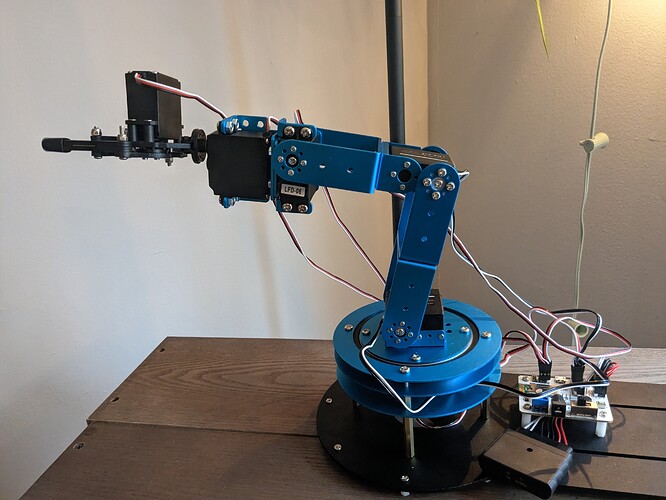

I bought and assembled a HiWonder Robotic Arm that I’m working on controlling with Argos Translate.

I don’t think this is immediately practical, but I’m an electronics noob and this is a good way to improve my skills.

The idea is to encode the sensor data, including camera images, as text and then “translate” this source text into a target string in JSON format that gives control commands to the arm. I don’t think this would be as efficient as the algorithm described in the above paper, but it would let me use one software pipeline for both NLP and robotics.
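To make the “sensor data in, JSON out” idea more concrete, here is a minimal sketch of the two ends of that pipeline. Everything in it is an assumption for illustration: the `<cmd>`/`<joints>`/`<img>` markers, the 16-level hex encoding of a grayscale camera frame, the `{"servos": [{"id": ..., "position": ...}]}` command schema, and the servo IDs and 0 to 1000 position limits are hypothetical and not the HiWonder arm’s actual protocol. The translation step in the middle, where a trained Argos Translate model would map the source string to the JSON target, is left out because no such sensor-to-JSON model is assumed to exist here.

```python
import json
from typing import Dict, List

# Hypothetical servo IDs and position limits; the real arm's servo
# numbering and ranges may differ.
SERVO_LIMITS = {1: (0, 1000), 2: (0, 1000), 3: (0, 1000)}


def encode_image(pixels: List[List[int]], levels: int = 16) -> str:
    """Quantize an 8-bit grayscale image (rows of 0-255 values) into one hex digit per pixel."""
    step = 256 // levels
    rows = [
        "".join(format(min(p // step, levels - 1), "x") for p in row)
        for row in pixels
    ]
    # Rows are separated by spaces so the text keeps the image's 2D layout.
    return " ".join(rows)


def encode_sensors(joint_positions: Dict[int, int],
                   pixels: List[List[int]],
                   instruction: str) -> str:
    """Build the source text from an instruction, joint readings, and a camera frame."""
    joints = " ".join(f"j{sid}={pos}" for sid, pos in sorted(joint_positions.items()))
    return f"<cmd> {instruction} <joints> {joints} <img> {encode_image(pixels)}"


def decode_command(target_text: str) -> Dict[int, int]:
    """Parse the model's JSON output and clamp each servo target to its limits."""
    data = json.loads(target_text)
    command = {}
    for entry in data["servos"]:
        sid, pos = int(entry["id"]), int(entry["position"])
        if sid not in SERVO_LIMITS:
            # Reject IDs the arm doesn't have instead of sending them blindly.
            raise ValueError(f"unknown servo id {sid}")
        low, high = SERVO_LIMITS[sid]
        command[sid] = max(low, min(high, pos))
    return command


if __name__ == "__main__":
    source = encode_sensors({2: 500, 1: 300}, [[0, 255], [128, 64]], "pick up the red block")
    print(source)
    # → <cmd> pick up the red block <joints> j1=300 j2=500 <img> 0f 84
    print(decode_command('{"servos": [{"id": 1, "position": 1200}, {"id": 3, "position": 450}]}'))
    # → {1: 1000, 3: 450}
```

Clamping and rejecting unknown servo IDs on the output side matters because a text-generation model can emit well-formed JSON that is still physically unsafe; validating before anything reaches the motors keeps a bad “translation” from driving a joint past its limits.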