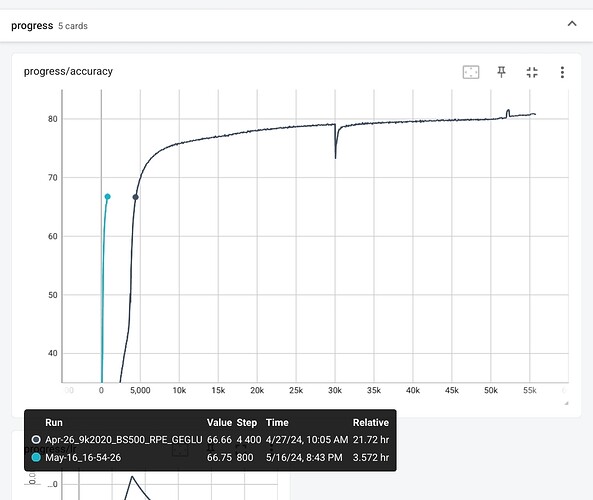

Gelu activated model is underperforming relu on automated metrics, much as the one with modified label smoothing, or bigger lexica. Still have to check why : for instance, I found label_smoothing = 0.11 to yield somewhat sharper wording (than reference translations too, thus the automated score was not so good…).

Also finished training a model with Shaw20 (RPE) position encoding. Although training data is the best obtained so far, automated metrics do not show such an improvement (COMET is up 0.0003, BLEU is either down or at par). I’ll also have to check why for myself.

And I reread the Shazeer article introducing gated activation functions, and they actually reduce, not increase, the ff layer size to keep parameters’ number constant across the whole transformer.

We use the same code base, model architecture, and training task as the base model from [Raffel et al., 2019]. The encoder and decoder each consist of 12 layers, with dmodel = 768. For the attention layers, h = 12 and dk = dv = 64. The FFN layers have hidden size df f = 3072. As we describe above, for the GLU-variant-based FFN layers, which have thee weight matrices instead of two, we reduce the hidden layer to df f = 2048, so as to maintain the same parameter and operation counts as the base model.

Since we use dmodel = 512, we simply cannot downsize the ff size by 2/3, so I simply started training with the same ff this morning. And I used “silu” actuvation, which is actually a “SwiGLU” in Open-NMT. According to Shazeer, it should yield >95% of the “gated-gelu” activation’s results, and spares the trouble of having to tweak dependencies.

All in all, the model with 18 encoder layers and 6 decoder layers has 199M parameters, against 278 if you increase ff to 6144. I’ll check the relation between ff size and quality later, switching fundamental parameters may have altered it pretty much.

As of tweaking, a PR is due in Locomotive to allow for activation and position encoding to be treated normally with config.json file. Before that, one has to check non-regression when using default values (since we had to uncomment “max_relative_positions” in train.py).