From what I see:

- Validation perplexity: 41.6536. This is the metric you should focus on: the lower, the better. Below 12 is already good.
- Everything that is not explicitly set in config.json takes its default value from the train.py file.
- Apparently, you are training on one GPU, which means the effective batch size = 1 × 8 × 8192 = 65536 (in train.py: 'batch_size': 8192, 'accum_count': 8).
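As a quick sanity check, the effective batch size is the product of the number of GPUs, 'batch_size' and 'accum_count'. A minimal Python sketch (the function name is hypothetical, not something from train.py), assuming 'batch_size' is counted per GPU:

```python
def effective_batch_size(num_gpus: int, batch_size: int, accum_count: int) -> int:
    # Gradients from accum_count batches on every GPU are accumulated
    # before a single optimizer step, so the three factors multiply.
    return num_gpus * batch_size * accum_count


# Defaults from train.py on a single GPU
print(effective_batch_size(1, 8192, 8))  # 65536
# A hypothetical second GPU with the same settings doubles it
print(effective_batch_size(2, 8192, 8))  # 131072
```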

In my experience, a larger batch size improves model quality, but with diminishing returns. For Transformer BASE models, I got the best quality with an effective batch size of about 200k (in your case, you can set 'accum_count': 24).

- With an effective batch size of 200k and a large dataset (more than 50M sentence pairs), 70-100k training steps are usually sufficient. With an effective batch size of 65k, I think you need about 200k steps.
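One way to compare the two step counts above is by the total number of tokens processed (steps × effective batch size). This is an illustrative reading, not a rule, and it assumes 'batch_size' is measured in tokens (OpenNMT-py's batch_type 'tokens') rather than in sentences:

```python
def tokens_seen(effective_batch: int, steps: int) -> int:
    # Approximate tokens processed over training,
    # assuming the batch size is counted in tokens.
    return effective_batch * steps


for batch, steps in [(200_000, 70_000), (200_000, 100_000), (65_536, 200_000)]:
    print(f"{batch:,} x {steps:,} steps = {tokens_seen(batch, steps):,} tokens")
# 200,000 x 70,000 steps = 14,000,000,000 tokens
# 200,000 x 100,000 steps = 20,000,000,000 tokens
# 65,536 x 200,000 steps = 13,107,200,000 tokens
```

Under that assumption, both settings land in the same ballpark of roughly 13-20 billion tokens, which is consistent with needing more steps at a smaller effective batch size.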

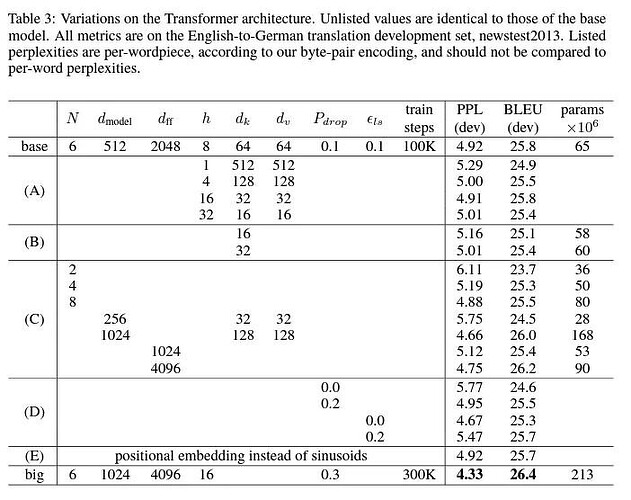

- You can also increase the size of the model itself (this makes sense if the dataset is large enough and of high quality):

'transformer_ff': 4096 increases the size of the FF (feed-forward) layer. Judging by article preprints and my own observations, this gives the greatest quality gain relative to the increase in model size.

'enc_layers': 20 increases the number of encoder layers. Combined with a larger FF layer, this gives the greatest gain in quality.
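To get a feel for how much these two settings grow the model, here is a rough parameter estimate. It is not the calculator mentioned below; it is a simplified sketch that counts only the attention and feed-forward weight matrices (ignoring biases, layer norms and embeddings) and assumes d_model = 512 and 6 decoder layers, as in a typical Transformer BASE setup:

```python
def approx_params(d_model: int, ff: int, enc_layers: int, dec_layers: int) -> int:
    # Encoder layer: self-attention (Q, K, V, output = 4 * d^2)
    # plus the FF block (two d x ff matrices).
    enc_layer = 4 * d_model**2 + 2 * d_model * ff
    # Decoder layer: self-attention + cross-attention (8 * d^2)
    # plus the same FF block (transformer_ff applies to both stacks).
    dec_layer = 8 * d_model**2 + 2 * d_model * ff
    return enc_layers * enc_layer + dec_layers * dec_layer


base = approx_params(512, 2048, enc_layers=6, dec_layers=6)
bigger = approx_params(512, 4096, enc_layers=20, dec_layers=6)
print(f"BASE:   {base / 1e6:.1f}M")    # BASE:   44.0M
print(f"bigger: {bigger / 1e6:.1f}M")  # bigger: 142.6M
```

Embeddings add more on top, depending on vocabulary size, so the real totals will be higher than these figures.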

I provided the calculator and parameters in this post:

If you increase the size of the model, do not forget to adjust 'batch_size' and 'accum_count' so that you keep the effective batch size you want while everything still fits in your VRAM.
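When a larger model forces you to lower 'batch_size' to fit in VRAM, you can raise 'accum_count' to compensate. A minimal sketch with a hypothetical helper that picks the 'accum_count' giving the effective batch size closest to a target, assuming a single GPU by default:

```python
def accum_count_for(target: int, batch_size: int, num_gpus: int = 1) -> int:
    # Nearest whole number of accumulation steps, never less than 1
    return max(1, round(target / (num_gpus * batch_size)))


for batch_size in (8192, 4096):
    accum = accum_count_for(200_000, batch_size)
    print(batch_size, accum, batch_size * accum)
# 8192 24 196608
# 4096 49 200704
```

Rounding to the nearest value reproduces the 'accum_count': 24 suggested above for 'batch_size': 8192; use math.ceil instead of round if you never want to fall below the target.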