With the rise of a new open-source and cheap LLM, DeepSeek, I’ve started to wonder how applicable it may be to fixing src - tgt alignment between translations.

I do not trust LLMs to have the ability to complete the translation themselves, but provided with an incorrect or misaligned one, I do think an LLMs experience with language can correct un-natural over/under/mis translations, as well as decide when it doesn’t know how to fix something.

This will no doubt only be testable and functional with high-resource languages, the biggest benefit obviously being Chinese and English, then descending down to other high res languages.

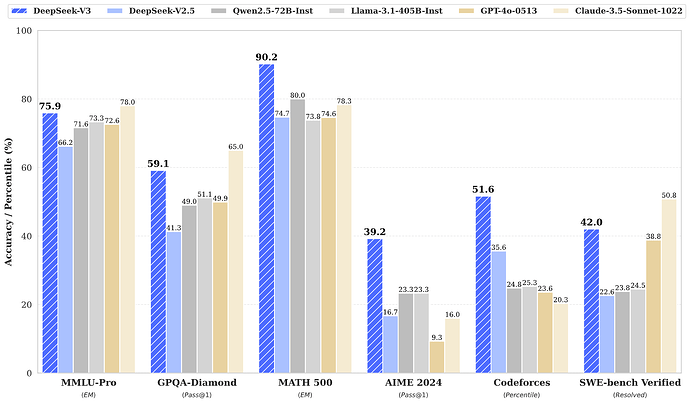

To correct a million sentences (assuming avg of 50 chars per translation, and that a token is 3 chars) it would cost around $7 (with deepseek v-3 API), as opposed to around $166 with the chatgpt-4o that is similar in performance (according to their repo)

1 Like

That’s an interesting use case; I wonder in terms of speed how long it would take to correct a few millions sentences.

I’m going to test this in a two-stage training plan. CCMatrix data which I’ll do all my normal filtering with first (some perplexity filtering, sentence similarity, length comparisons, normalization). Train a model until it plateaus, then take half of the data and feed it through deepseek (around 7m sentences).

My models are english centric multilingual models, where the xx - en and en - xx data is the same just reversed. So I think I’ll take half of an xx - en dataset, and further split that up in half so that deepseek corrects the first portion in an en - xx manner (deepseek fixing xx), and the second half will have deepseek fixing xx - en (deepseek fixing en).

Then just train the model on only this deepseek corrected data and compare the results.

1 Like

I’ve been experimenting with this and so far the returned results from deepseek have been good. Obviously now prompt engineering is a big part of the result, but I think I have a good one right now.

It takes around 1 hour and a half to correct 65,000 sentences, I’m using deepseek’s own API, which is known to be less reliable than other model hostsa out there. In the examples I’ve looked at, the results were promising, and I asked some natives for input (for en-ru) and they said the corrections were good in the examples shown.

The total cost seems to have changed, I think the API prices were adjusted, but as of right now the average cost per sentence is around 0.00747345 cents ($0.0000747345). Prompt could probably be decreased, but as of right now it’s around $75 to correct 1,000,000 sentences.

As a result of this, I’ve finished the model’s general training stage and will now fine-tune with some proprietary cleaned datasets combined with 65,000 cleaned deepseek sentences.

One thing that should be kept in mind is the LLM is instructed to only correct/nativize the target side. As a result, a messy src sentence will map to a correct (as much as possible) target, reversing this data to go from Y-X could cause the model to learn to map clean sentences to messy translations, thus the correction, if training a bilingual model, needs to be done to both sides.

1 Like

I was going to ask, how accurate are the corrections? I’m my testing of OpenAI’s ChatGPT, I noticed that while it generally translates decently, it makes a lot of subtle mistakes especially as sentence length increases. I look forward to hear if the method increases model accuracy. Very interesting stuff.

1 Like

The corrections are pretty on point. They correct a bunch of subtle mistakes. However, I don’t speak Russian, so I can’t analyze the gist of the corrections until I work on Spanish next.

But based on the feedback I’ve asked some native speakers, it does really well in correcting the awkwardness, but still gives in to relay things in a machine-manner sometimes (I think this is due to the prompt strictness).

I’ll fine-tune and analyze the difference in BLEU score against the flores200 dataset.

1 Like