Today morning i tested the demo API and i noticed that some translations between italian and german are not accurate at all.

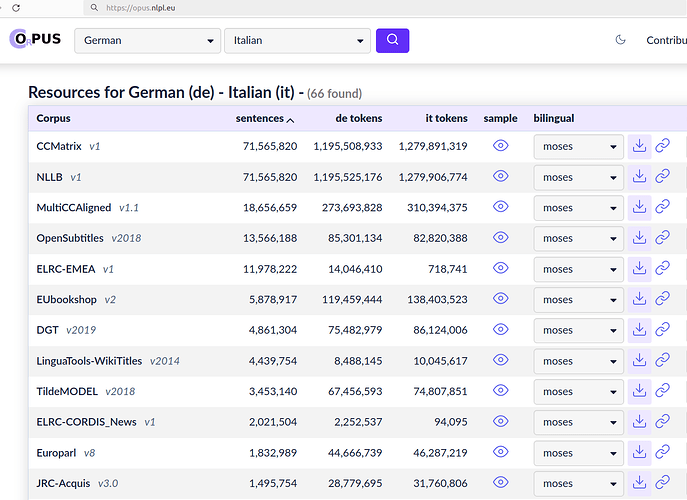

I was wondering whether i can re-train by the parallel corpus JRC-Acquis (Index of JRC-Acquis/alignments) or similar datasets so that to improve a little the precision.

I saw you described how to re-train the model by “locomotive”,

i do have a couple of questions in this regard:

-

is it only matter of quantity? are we going to improve the accuracy just by adding “examples”?

-

do you have tracked the trainingsets that have been used to train the current production-model?

-

it is not clear to me whether the re-train is cumulative or not. i mean if I want to add some “examples” do we have to include again in “sources” all the datasets used so far or not?

many thanks,

Simone