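I'm training an English → Thai model with Locomotive, and SentencePiece training fails every time with `SentenceIterator and trainer_spec.input() must be exclusive`. Please help me solve it. The output of my runs is below.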

```
Sources: 1
- file://D:/Train Model/Wiki/Locomotive-main/argosdata_en_th (hash:d0ba80f)
Downloading https://raw.githubusercontent.com/stanfordnlp/stanza-resources/master/resources_1.1.0.json: 122kB [00:00, 43.3MB/s]
2024-02-08 14:56:11 INFO: Downloading these customized packages for language: en (English)…
=======================
| Processor | Package |
| tokenize | ewt |
Downloading http://nlp.stanford.edu/software/stanza/1.1.0/en/tokenize/ewt.pt: 100%|██| 631k/631k [00:00<00:00, 672kB/s]
2024-02-08 14:56:15 INFO: Finished downloading models and saved to D:\Train Model\Wiki\Locomotive-main\run\en_th-1.0\stanza.
No sources merged
sentencepiece_trainer.cc(77) LOG(INFO) Starts training with :
trainer_spec {
  input_format:
  model_prefix: D:\Train Model\Wiki\Locomotive-main\run\en_th-1.0/sentencepiece
  model_type: UNIGRAM
  vocab_size: 30000
  self_test_sample_size: 0
  character_coverage: 1
  input_sentence_size: 1000000
  shuffle_input_sentence: 1
  seed_sentencepiece_size: 1000000
  shrinking_factor: 0.75
  max_sentence_length: 4192
  num_threads: 16
  num_sub_iterations: 2
  max_sentencepiece_length: 16
  split_by_unicode_script: 1
  split_by_number: 1
  split_by_whitespace: 1
  split_digits: 0
  pretokenization_delimiter:
  treat_whitespace_as_suffix: 0
  allow_whitespace_only_pieces: 0
  required_chars:
  byte_fallback: 0
  vocabulary_output_piece_score: 1
  train_extremely_large_corpus: 0
  hard_vocab_limit: 1
  use_all_vocab: 0
  unk_id: 0
  bos_id: 1
  eos_id: 2
  pad_id: -1
  unk_piece: <unk>
  bos_piece: <s>
  eos_piece: </s>
  pad_piece: <pad>
  unk_surface:  ⁇
  enable_differential_privacy: 0
  differential_privacy_noise_level: 0
  differential_privacy_clipping_threshold: 0
}
normalizer_spec {
  name: nmt_nfkc
  add_dummy_prefix: 1
  remove_extra_whitespaces: 1
  escape_whitespaces: 1
  normalization_rule_tsv:
}
denormalizer_spec {}
```

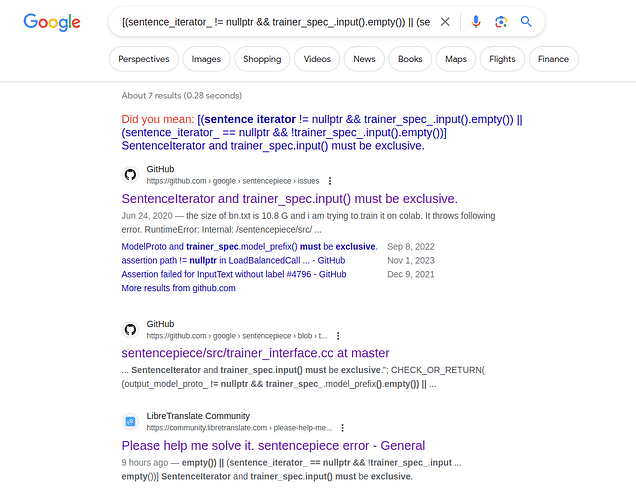

```
Internal: D:\a\sentencepiece\sentencepiece\src\trainer_interface.cc(332) [(sentence_iterator_ != nullptr && trainer_spec_.input().empty()) || (sentence_iterator_ == nullptr && !trainer_spec_.input().empty())] SentenceIterator and trainer_spec.input() must be exclusive.
```
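The bracketed condition in that message says SentencePiece must get its training text from exactly one source: a sentence iterator with an empty `input` list, or a non-empty `input` list with no iterator. SentencePiece normally lists each training file as an `input:` line at the top of `trainer_spec`, and the dump above has none, which suggests the trainer was handed an empty `input`. The sketch below only restates the C++ condition in Python so the failing case is easy to see; the function name is hypothetical, and it assumes `train.py` passes no iterator.

```python
from typing import Sequence


def inputs_are_exclusive(has_sentence_iterator: bool, input_files: Sequence[str]) -> bool:
    """Hypothetical restatement of the check quoted from trainer_interface.cc(332).

    Exactly one source of training text is allowed: an iterator with an empty
    `input` list, or a non-empty `input` list with no iterator.
    """
    has_input = len(input_files) > 0
    return (has_sentence_iterator and not has_input) or (
        not has_sentence_iterator and has_input
    )


# Assumption: no iterator is passed. With an empty `input` list (no `input:`
# line in the log) neither branch holds, so SentencePiece raises the error.
print(inputs_are_exclusive(False, []))              # False
print(inputs_are_exclusive(False, ["corpus.txt"]))  # True
```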

Running it again prints the same SentencePiece settings (`trainer_spec`, `normalizer_spec`, `denormalizer_spec`) and fails with the same error:

```
PS D:\Train Model\Wiki\Locomotive-main> python train.py --config config.json
Training English → Thai (1.0)
Sources: 1
- file://D:/Train Model/Wiki/Locomotive-main/argosdata_en_th (hash:d0ba80f)
No changes in sources
sentencepiece_trainer.cc(77) LOG(INFO) Starts training with :
Internal: D:\a\sentencepiece\sentencepiece\src\trainer_interface.cc(332) [(sentence_iterator_ != nullptr && trainer_spec_.input().empty()) || (sentence_iterator_ == nullptr && !trainer_spec_.input().empty())] SentenceIterator and trainer_spec.input() must be exclusive.
```
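One way to narrow this down is to check the files that should feed SentencePiece and, if they look fine, run the trainer outside Locomotive with the settings printed in the log. This is a sketch under assumptions: it needs the `sentencepiece` Python package, the helper names are hypothetical, and so are any paths passed in, because the log does not show which files `train.py` hands to the trainer.

```python
import os
from typing import Dict, List, Sequence


def check_spm_inputs(paths: Sequence[str]) -> List[str]:
    """Hypothetical helper: list problems with the files meant for `input`."""
    if not paths:
        return ["input list is empty"]
    problems = []
    for path in paths:
        if not os.path.isfile(path):
            problems.append(f"missing file: {path}")
        elif os.path.getsize(path) == 0:
            problems.append(f"empty file: {path}")
    return problems


def logged_trainer_kwargs(input_paths: Sequence[str], model_prefix: str) -> Dict[str, object]:
    """Settings copied from the trainer_spec in the log (not from Locomotive's code)."""
    return {
        "input": list(input_paths),  # a list is accepted and joined by sentencepiece
        "model_prefix": model_prefix,
        "model_type": "unigram",
        "vocab_size": 30000,
        "character_coverage": 1.0,
        "input_sentence_size": 1000000,
        "shuffle_input_sentence": True,
    }


def train_standalone(input_paths: Sequence[str], model_prefix: str) -> None:
    """Validate the inputs first, then train SentencePiece directly."""
    problems = check_spm_inputs(input_paths)
    if problems:
        raise ValueError("; ".join(problems))
    import sentencepiece as spm  # imported lazily: pip install sentencepiece

    spm.SentencePieceTrainer.train(**logged_trainer_kwargs(input_paths, model_prefix))
```

For example, `train_standalone(["source.txt", "target.txt"], "sp_test")` with the real merged corpus files (those names are hypothetical). If `check_spm_inputs` already reports a problem, the failure is upstream of SentencePiece, which would be consistent with the `No sources merged` line from the first run.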