Thought this was a good summary: 12 Critical Flaws of BLEU - Benjamin Marie's Blog

I’ve found BLEU scores are difficult to use for comparing machine translation systems because different BLEU benchmarks seem to not be very consistent. Maybe if you have a single benchmark methodology and dataset you could get a useful comparison but even then BLEU scores only loosely correlate with ratings from Human translators.

For training the Argos Translate models I haven’t used BLEU scores extensively. Instead I’ve focused on selecting high quality data and evaluated the trained models manually. I only speak English well so I test the x->en models by translating a Wikipedia article in language x to English. And I test the en->x models by translating English text to language x and then translating back to English with my models or Google Translate.

In my experience when model training goes poorly or overfits somehow it’s normally pretty obvious even if you don’t speak the language. For example, it will always return a translation of an empty string or it will always return the source text as the translation.

I’ve been finding that the reference translations in benchmark datasets like flores200 are sometimes not exactly great. That’s because of missing context or sometimes even just preference. Also as it’s pointed in the article, the smallest errors sometimes cause the biggest mistakes, e.g. a translation can deviate significantly in verbage compared to the reference and still be very correct, while another can closely match the reference expect for one important word, which totally derails the quality.

Hello,

In order to allow for COMET evaluations, I have made some modifications to eval.py

- added an argument “translate_flores” that translates a flores dataset into a file, for further evaluation with COMET (since it takes actual files as arguments)

- added another argument “flores_dataset”, when I realized that the default “dev” dataset is not the one in the OPUS-MT benchmarks (which uses “devtest”).

Actually, BLEUs on flores200-devtest are more in line with published results, so I want to know if

- we should continue to use the dev dataset as default in locomotive’s “data.py”,

- you are interested in the modifications.

If you are, please tell me about the dataset thing, I will wrap all this on github and send a pull request.

- We can switch to devtest, I honestly picked dev over devtest randomly. If other benchmarks use devtest, let’s align to that.

- Yes!

I opened the pull request.

For now, I have kept the “dev” dataset as default, changing it would create a discrepancy with the data.py module where it is also default

Also, I may have cracked COMET-22 score automation in eval.py, still have to test it.

Though, because the protobuf dependency regressed to version 4 upon installing unbabel-comet, I think we should add the comet dependancy to Locomotive’s requirements.txt to make sure everything works fine together…

Would it be ok for you? We already have sacrebleu after all.

Since I need to open a new instance in the weeks to come, I’ll install it from scratch from my Locomotive fork and open the PR afterwards.

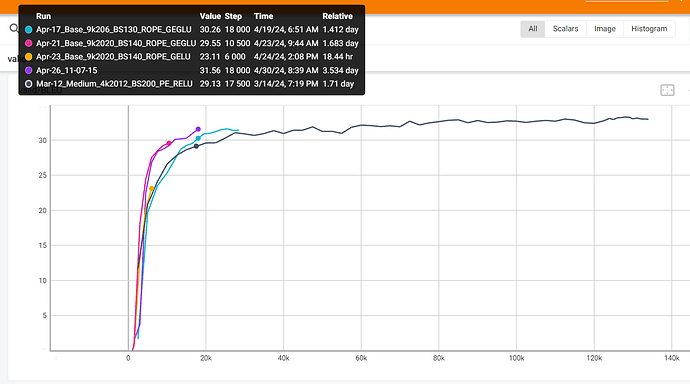

I also think we should have an optional logger in train.py : I consider tensorboard to be nice, but when you need to have a look at your training data retrospectively, plain logs are still a useful artefact. Actually, this might be the next pull request since I have spent quite a good deal of time so far copying checkpoint metrics to an xls file to analyze and compare them afterwards, when a bunch of csv might have suited my purpose better…

I’m open to adding one more dependency, so long as it works on all platforms and it’s not overly bloated (does it pull any other co-dependencies? Which ones?) sacrebleu is fairly lightweight.