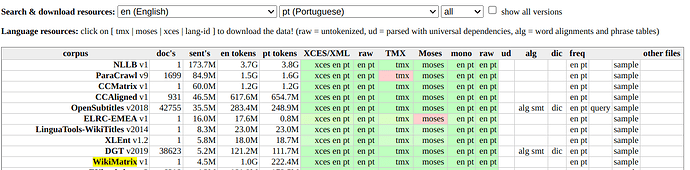

Here are some translation samples:

en → pt

English Source Text (Wikipedia)

Hector Berlioz (11 December 1803 – 8 March 1869) was a French Romantic composer. His output includes orchestral works such as Harold in Italy, choral pieces including his Requiem and L’enfance du Christ, and works of hybrid genres such as the “dramatic symphony” Roméo et Juliette and the “dramatic legend” La damnation de Faust. Expected to enter medicine, Berlioz defied his family by taking up music, and won the Prix de Rome in 1830. Berlioz married the Irish Shakespearean actress Harriet Smithson, who inspired his first major success, the Symphonie fantastique, in which an idealised depiction of her occurs throughout. His first opera, Benvenuto Cellini, was a failure. The second, the epic Les Troyens, was so large in scale that it was never staged in its entirety during his lifetime. Meeting only occasional success in France as a composer, Berlioz turned to conducting, in which he gained an international reputation. He also wrote musical journalism throughout much of his career.

1.1 (Proposed) Translation

Texto para traduzir de Hector Berlioz (11 de dezembro de 1803 – 8 de março de 1869) foi um compositor francês. Sua saída inclui obras orquestrais como Harold em Itália, peças coral, incluindo seu Requiem e L’enfance du Cristo, e obras de gêneros híbridos como a “fínfoniadramática” Roméo et Juliette e a " lendadramática" La damnation de Faust. Esperava entrar em medicina, Berlioz defiou sua família tomando música e ganhou o Prix de Roma em 1830. Berlioz casou-se com a atriz irlandesa Shakespearean Harriet Smithson, que inspirou seu primeiro grande sucesso, a fantastique Symphonie, na qual ocorre uma representação idealizada de sua atriz. Sua primeira ópera, Benvenuto Cellini, foi uma falha. O segundo, o épico Les Troyens, foi tão grande em escala que nunca foi palco em sua totalidade durante sua vida. Encontro apenas um sucesso ocasional na França como compositor, Berlioz voltou a conduzir, em que ganhou uma reputação internacional. Ele também escreveu jornalismo musical em grande parte de sua carreira.

1.0 (Prod) Translation

Hector Berlioz (11 de dezembro de 1803 - 8 de março de 1869) foi um compositor romântico francês. Sua produção inclui obras orquestrais como Haroldo na Itália, peças corais incluindo sua Requiem e L’enfance du Christ, e obras de gêneros híbridos como a “sinfonia dramática” Roméo et Juliette e a " legenda dramática" La Damnation de Faust. Esperava entrar na medicina, Berlioz desafiou sua família ao tomar música e ganhou o Prix de Roma em 1830. Berlioz casou-se com a atriz irlandesa de Shakespeare Harriet Smithson, que inspirou seu primeiro grande sucesso, a fantasia de Symphonie, na qual uma representação idealizada dela ocorre em toda parte. Sua primeira ópera, Benvenuto Cellini, foi um fracasso. O segundo, o épico Les Troyens, era tão grande em escala que nunca foi encenado em sua totalidade durante sua vida. Reunindo apenas sucesso ocasional na França como compositor, Berlioz virou-se para conduzir, em que ganhou uma reputação internacional. Ele também escreveu jornalismo musical em grande parte de sua carreira.

pt → en

Portuguese Source Text (Wikipedia)

Mohammed Ould Abdel Aziz (Akjoujt, 20 de dezembro de 1956) é um político e foi o 8.º presidente da Mauritânia entre 2009 a 2019.[1] Soldado de carreira e oficial de alta patente, foi destaque durante o golpe em agosto de 2005 que depôs o presidente Maaouya Ould Sid’Ahmed Taya, e liderou o golpe em agosto de 2008, que derrubou o presidente Sidi Ould Cheikh Abdallahi. Após o golpe de 2008, Abdel Aziz tornou-se Presidente do Conselho Superior de Estado como parte do que foi descrito como uma transição política que conduziu a uma nova eleição. Renunciou ao cargo em abril de 2009 para se apresentar como candidato nas eleições presidenciais de julho de 2009, saindo eleito. Foi empossado em 5 de agosto de 2009. Posteriormente, foi reeleito em 2014 e não buscou a reeleição em 2019. Foi sucedido por Mohamed Ould Ghazouani, que assumiu o cargo em 1 de agosto de 2019.

1.1 (Proposed) Translation

Mohammed Ould Abdel Aziz (born December 20, 1956) is a politician, and was the 8th president of Mauritania between 2009 and 2019.[1] High patent career and official soldier, was highlighted during the coup in August 2005 which led President Maaouya Ould Sid’Ahmed Taya, and led the coup in August 2008, which broke down President Sidi Ould Cheikh Abdallahi. After the 2008 coup, Abdel Aziz became President of the Higher Council of State as part of what was described as a political transition that led to a new election. He denounced the position in April 2009 to present himself as a candidate in the presidential elections of July 2009, leaving elected. On 5 August 2009. Subsequently, it was re-elected in 2014 and did not seek re-election in 2019. It was succeeded by Mohamed Ould Ghazouani, who took office on 1 August 2019.

1.0 (Prod) Translation

Mohammed Ould Abdel Aziz (December 20, 1956) is a politician and was the 8th president of Mauritania from 2009 to 2019.[1] High-ranking officer and career soldier, was featured during the coup in August 2005 which deposed President Maaouya Ould Sid’Ahmed Taya, and led the coup in August 2008, which ousted President Sidi Ould. After the 2008 coup, Abdel Aziz became President of the Superior Council of State as part of what was described as a political transition that led to a new election. He resigned in April 2009 to appear as a candidate in the July 2009 presidential election, leaving elected. It was retired on 5 August 2009. He was re-elected in 2014 and did not seek re-election in 2019. It was succeeded by Mohamed Ould Ghazouani, who took office on 1 August 2019.