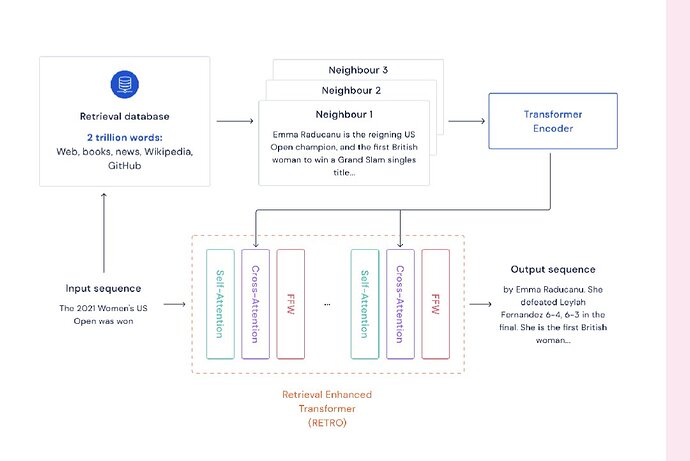

They have a system for incorporating outside information into their model: background information is encoded with a Transformer encoder, and the model draws on those encodings at inference time.
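To make that concrete, here is a rough sketch of how a model might attend over pre-encoded background information. This is not their implementation. It is plain scaled dot-product attention, and the names `attend`, `memory_keys`, and `memory_values` are made up for illustration. The toy vectors stand in for whatever a Transformer encoder would produce.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, memory_keys, memory_values):
    """Scaled dot-product attention of one query over a background memory.

    memory_keys / memory_values are hypothetical stand-ins for encoder
    outputs computed from the background text ahead of time.
    """
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in memory_keys
    ]
    weights = softmax(scores)
    dim = len(memory_values[0])
    # Weighted sum of the value vectors, one output dimension at a time.
    context = [
        sum(w * v[i] for w, v in zip(weights, memory_values))
        for i in range(dim)
    ]
    return weights, context


# Toy background memory: three "encoded" snippets (values are illustrative).
memory_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
memory_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

# The model's current state acts as the query at inference time.
query = [2.0, 0.0]
weights, context = attend(query, memory_keys, memory_values)
print(weights, context)
```

The snippets whose keys line up with the query get more weight, so the resulting context vector leans toward the most relevant background information.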

*Human Parity on CommonsenseQA: Augmenting Self-Attention with External Attention* describes a similar approach.

Interesting! I'm trying to think of applications for this. Is it a sort of smart “autocomplete”? I guess it could be used in places like Gmail for composing emails or Android for text messages (they already do that to some extent).

I think the general idea is to give the model access to more background information so that it generates more relevant text.