Hey all ![]()

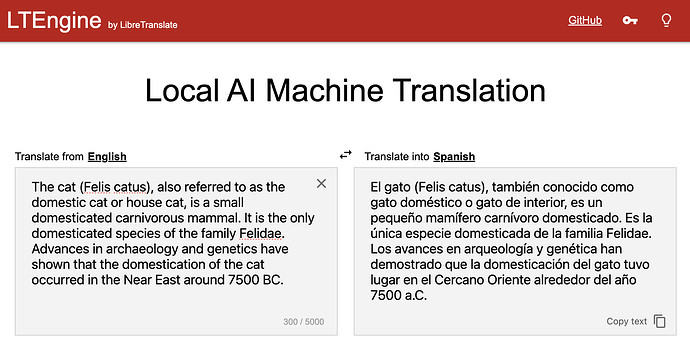

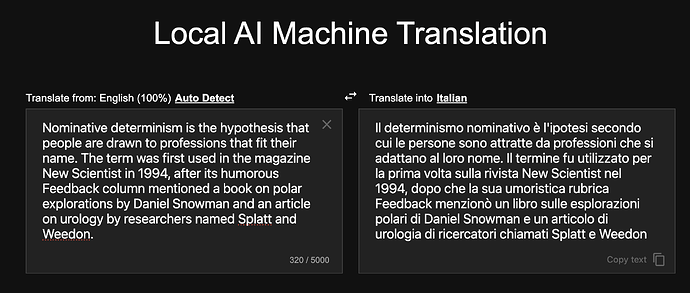

I’m announcing a new project I’ve been working on called LTEngine.

It aims to offer a simple and easy way for running local and offline machine translation via large language models while offering a LibreTranslate API compatible server. It’s currently powered by LLAMA.cpp (via Rust bindings) running a variety of quantized Gemma3 models. The largest model (gemma3-27b) can fit on a consumer RTX 3090 with 24G of VRAM whereas smaller models can still run at decent speeds on a CPU only.

The software compiles to a single, cross-compatible, statically linked binary which includes everything. I’ve currently tested the software on macOS and Windows, will probably check Linux off the list in the upcoming days.

To build and run:

Requirements:

Steps:

git clone https://github.com/LibreTranslate/LTEngine --recursive

cd LTEngine

cargo build [--features cuda,vulkan,metal] --release

./target/release/ltengine

In my preliminary testing for English <=> Italian (which I can evaluate as a native speaker), the 12B and 27B models perform just as good or outperform DeepL on a variety of inputs, but obviously this is not conclusive and I’m releasing this first version early to encourage early feedback and testing.

The main drawback of this project compared to the current implementation of LibreTranslate is speed and memory usage. Since the models are much larger compared to the lightweight transformer models of argos-translate, inference time takes a while and memory requirements are much higher. I don’t think this will replace LibreTranslate, but rather offer a tradeoff between speed and quality. I think it will mostly be deployed in local, closed environments rather than being offered publicly on internet-facing servers.

The project uses the Gemma3 family of LLM models, but people can experiment with other language models like Llama or Qwen, so long as they work with llama.cpp they will work with LTEngine.

Look forward to hear your feedback ![]()